My O(n²) solution will beat your O(n) one

A common assumption in algorithm design is that lower time complexity always leads to better performance. However, that's not always the case.

Take the classic Two Sum problem: given an array, find two elements whose sum equals a target value. The naive solution uses a nested loop, resulting in O(n²) time complexity. An optimized approach employs a hash map to achieve O(n) time complexity and O(n) space complexity.

The Twist

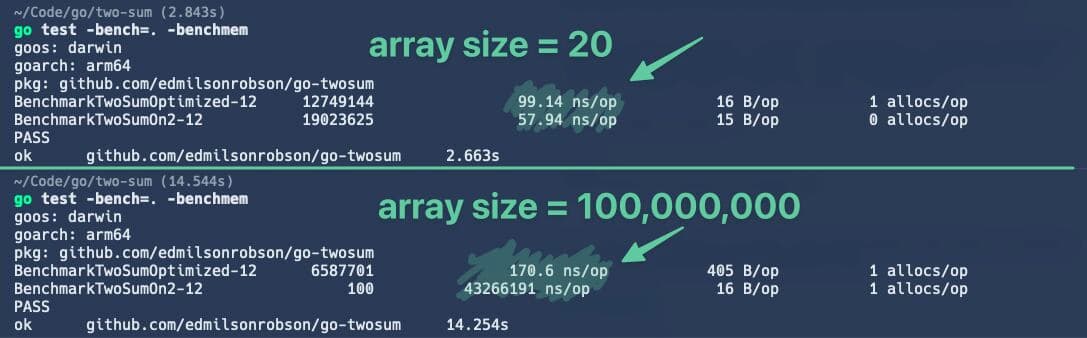

But here's the cool twist: for small arrays (e.g., fewer than 100 elements), the overhead of managing a hash map can outweigh its benefits. In Go, benchmarking reveals that the brute-force method can be up to twice as fast in such scenarios. Performance between the two methods evens out around 200 elements, after which the hash map approach pulls ahead.

This highlights that Big O notation describes scalability, not absolute performance. For small datasets, simpler algorithms with higher time complexity can be more efficient due to lower constant factors and overhead.

It's a valuable reminder to consider practical performance implications, not just theoretical efficiency.